
# The AI Race Heats Up: Alibaba's Qwen 2.5 Max Sets a New Standard for Language Models


Lilo

2/2/2025

## Introduction

In the fast-paced world of artificial intelligence, breakthroughs happen at a dizzying speed. On January 29, 2025, the first day of the Lunar New Year, Alibaba Cloud made waves with the release of Qwen 2.5 Max, a large language model (LLM) that Alibaba claims outperforms industry heavyweights like OpenAI's GPT-4o and DeepSeek-V3. As an AI specialist, I couldn't wait to dive into the details and share my insights with you. In this post, we'll explore what makes Qwen 2.5 Max stand out and why it's a significant milestone in the AI race.

## Key Features and Specifications

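Let's start with the technical nitty-gritty. Qwen 2.5 Max boasts a 72-billion-parameter Mixture-of-Experts (MoE) architecture with 64 specialized sub-networks, known as experts. Instead of running every parameter on every input, an MoE model uses a router to send each token to a small subset of experts, so only a fraction of the network does work at any one time. This approach allows for efficient processing and adaptability to various tasks.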
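
To make the routing idea concrete, here is a minimal sketch of top-k expert selection in plain Python. It is an illustration of how MoE routers generally work, not Alibaba's implementation: the expert count of 64 comes from the figures above, while `TOP_K = 2`, the random router scores, and the function names are my own assumptions.

```python
import math
import random

NUM_EXPERTS = 64  # expert count quoted in this post
TOP_K = 2         # assumption: experts activated per token (not from Alibaba)


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return [(i, probs[i] / weight_sum) for i in chosen]


# Random scores stand in for the output of a learned router layer.
rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(scores)
active_fraction = TOP_K / NUM_EXPERTS

print("Selected (expert index, weight) pairs:", selected)
print(f"Share of experts active for this token: {active_fraction:.1%}")
```

The point of the sketch is the ratio at the end: with only a couple of experts active per token, most of the model's parameters sit idle for any given token, which is where the efficiency claim for MoE designs comes from.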

But what really caught my eye is the sheer volume of data used for pretraining: a staggering 20 trillion tokens. To put that into perspective, if you assume a typical book runs to about 100,000 tokens (my own rough estimate), that is the equivalent of around 200 million books. This extensive training is what Alibaba credits for Qwen 2.5 Max's ability to tackle complex queries.

Another standout feature is the expansive context window of 128,000 tokens (approximately 100,000 words). This means Qwen 2.5 Max can maintain coherence and understanding across lengthy documents, making it a valuable tool for researchers and analysts.
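
If you want a quick feel for whether a document would fit in a window of that size, a rough estimate is enough to start with. The sketch below assumes about 0.75 English words per token, which is a common rule of thumb and not a property of Qwen's tokenizer; the function names and the `reserved_for_output` budget are hypothetical. For real work you would count tokens with the model's own tokenizer.

```python
import math

CONTEXT_WINDOW_TOKENS = 128_000  # context window figure quoted in this post
WORDS_PER_TOKEN = 0.75           # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Estimate the token count from whitespace-separated words."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the text plus a reply budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


contract = "clause " * 90_000   # stand-in for a 90,000-word contract
archive = "clause " * 100_000   # stand-in for a 100,000-word archive

print(estimate_tokens(contract), fits_in_context(contract))
print(estimate_tokens(archive), fits_in_context(archive))
```

Note that once you leave room for the model's reply, a document right at the 100,000-word mark may no longer fit, so it pays to budget below the headline number.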

But Qwen 2.5 Max isn't just a one-trick pony. Its multimodal capabilities allow it to process text, images, audio, and video inputs. Imagine being able to analyze a 20-minute video or generate SVG code from an image—the possibilities are endless! Plus, with audio input support for 29 languages, Qwen 2.5 Max is poised to make a global impact.

## Performance Comparison

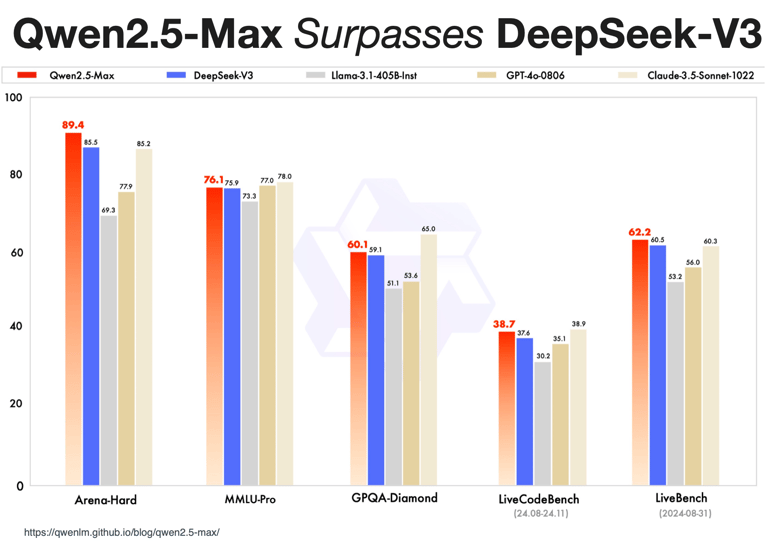

Now, let's talk numbers. Alibaba claims that Qwen 2.5 Max outperforms its rivals across several benchmarks. Take a look at this comparison table:

As you can see, Qwen 2.5 Max holds its own against the competition, particularly in coding performance and context window size. However, its cost per million tokens is higher than DeepSeek-V3's, which we'll explore further in the cost section below.

Alibaba also reports that Qwen 2.5 Max outperforms other models in several key benchmarks, including Arena-Hard, LiveBench, LiveCodeBench, MMLU (Massive Multitask Language Understanding), and GPQA-Diamond. While specific scores aren't available yet, these results suggest advancements in both general knowledge and coding abilities.

## Cost-Effectiveness and Efficiency

Let's address the elephant in the room: pricing. At first glance, Qwen 2.5 Max's cost structure might raise some eyebrows:

- Input tokens: $10 per million

- Output tokens: $30 per million

Compared to GPT-4o ($2.50/M input, $10/M output) and DeepSeek-V3 ($0.14/M input, $0.28/M output), Qwen 2.5 Max looks pricey: four times GPT-4o's input price and three times its output price. However, there's more to the story.
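
To see what those price gaps mean for an actual bill, here is a small calculator using the per-million-token prices quoted above. The workload size (50 million input tokens and 10 million output tokens) is a made-up example, and the function and variable names are my own; prices change often, so treat the numbers as a snapshot.

```python
# (input price, output price) in USD per million tokens, as quoted in this post
PRICES_PER_MILLION = {
    "Qwen 2.5 Max": (10.00, 30.00),
    "GPT-4o": (2.50, 10.00),
    "DeepSeek-V3": (0.14, 0.28),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload at the quoted per-token prices."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical monthly workload, chosen only for illustration.
INPUT_TOKENS = 50_000_000
OUTPUT_TOKENS = 10_000_000

costs = {
    model: workload_cost(model, INPUT_TOKENS, OUTPUT_TOKENS)
    for model in PRICES_PER_MILLION
}

for model, cost in costs.items():
    print(f"{model:<14} ${cost:,.2f}")
```

For this example workload the totals come to $800.00 for Qwen 2.5 Max, $225.00 for GPT-4o, and $9.80 for DeepSeek-V3, so the gap is far from a rounding error. Swap in your own token volumes to see where you land.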

Alibaba claims that Qwen 2.5 Max's MoE architecture reduces computational costs by 30% compared to traditional dense models while maintaining high performance. Keep in mind that this saving sits on the compute side: what you pay as an API customer is still set by the per-token price. The efficiency only turns into lower bills if it shows up as future price cuts or as better results per token, which is worth watching if your business processes large volumes of data.

## Real-World Applications

So, what can you actually do with Qwen 2.5 Max? The possibilities are vast! Let's explore a few potential use cases:

1. Legal Industry: With its expansive context window, Qwen 2.5 Max can analyze lengthy contracts and legal documents, saving time and resources for law firms and legal departments.

2. Research and Academia: Researchers can leverage Qwen 2.5 Max to process extensive academic papers and datasets, uncovering insights and patterns that might otherwise go unnoticed.

3. Media and Entertainment: Imagine being able to analyze a 20-minute video for content moderation, sentiment analysis, or even generating highlight reels. Qwen 2.5 Max's multimodal capabilities open up exciting opportunities in the media industry.

4. Global Business Communications: With audio input support for 29 languages, Qwen 2.5 Max can break down language barriers and facilitate seamless communication in multinational organizations.

5. Software Development: Qwen 2.5 Max's enhanced coding capabilities can assist developers in writing cleaner, more efficient code and even automate certain programming tasks.

## Insider Insights and Interesting Facts

Now, here's a tidbit that caught my attention: Alibaba chose to release Qwen 2.5 Max on the first day of the Lunar New Year, when most Chinese people are on holiday. This timing suggests that Alibaba was feeling the heat from DeepSeek's recent advancements and wanted to make a splash in the AI market.

It's no secret that Chinese tech giants are locked in an intense battle for AI supremacy, and Alibaba's bold move showcases just how high the stakes are. As an AI enthusiast, I can't help but be excited by this rapid pace of innovation and competition.

## Tips for Potential Users

If you're considering leveraging Qwen 2.5 Max for your business or research, here are a few tips to keep in mind:

1. Evaluate the Performance-Cost Trade-off: While Qwen 2.5 Max offers impressive capabilities, it's crucial to weigh the benefits against the costs and compare it with alternatives like DeepSeek-V3.

2. Exploit Multimodal Capabilities: Don't limit yourself to text-based tasks. Explore how Qwen 2.5 Max's multimodal features can enhance your workflows and provide new insights.

3. Leverage the Large Context Window: If your work involves analyzing lengthy documents or complex datasets, Qwen 2.5 Max's expansive context window could be a game-changer.

4. Experiment with Coding Capabilities: For software developers, Qwen 2.5 Max's enhanced coding performance opens up new possibilities for automation and efficiency.

## Future Projections

The release of Qwen 2.5 Max is more than just a single event; it's a harbinger of the future of AI. Here are some trends I believe we'll see in the coming years:

1. Intensified Competition Among Chinese AI Companies: As Alibaba, DeepSeek, and other Chinese tech giants continue to push the boundaries of AI, we can expect to see more breakthroughs and innovations challenging Western dominance in the field.

2. Emphasis on Efficient Architectures: The success of Qwen 2.5 Max's MoE architecture highlights the growing focus on efficiency in AI model design. I anticipate more research and development in this area to create powerful yet cost-effective solutions.

3. Multimodal AI as the New Norm: Qwen 2.5 Max's multimodal capabilities are just the beginning. As AI models become more versatile in handling diverse data types, we'll see a surge in applications that seamlessly integrate text, images, audio, and video.

4. Democratization of AI: As competition drives down costs and improves accessibility, I believe we'll witness a democratization of AI technology. Small businesses and individuals will have access to powerful tools previously reserved for large corporations and research institutions.

## Conclusion

Alibaba's Qwen 2.5 Max represents a significant leap forward in the AI landscape. Its impressive capabilities, multimodal features, and efficient MoE architecture make it a strong contender for the title of "most powerful LLM on the market," even if its per-token pricing is currently higher than its rivals'. However, as with any bold claim, independent verification and benchmarking are essential to validate its performance.

One thing is certain: the AI race is heating up, and Qwen 2.5 Max has raised the bar for what we can expect from language models. As the competition intensifies, I have no doubt that we'll see even more remarkable breakthroughs in the near future.


© Copyright King of Automation 2024. All Rights Reserved.