The art of prompt engineering: master your AI interactions

Lilo

1/10/2025

## Introduction

As AI continues to advance at a rapid pace, businesses and individuals are increasingly turning to powerful language models like ChatGPT to automate tasks and streamline workflows. However, getting the most out of these tools requires more than just asking questions: it demands a strategic approach known as prompt engineering.

In this guide, I'll walk you through the essentials of prompt engineering, drawing on the paper "A Prompt Pattern Catalog to Enhance Prompt Engineering with ChatGPT" (2023) by Jules White and his colleagues at Vanderbilt University. By the end, you'll have a clear understanding of what prompt engineering is, why it matters, and how you can start applying it to your own interactions with ChatGPT.

## What is Prompt Engineering?

At its core, prompt engineering is the art and science of crafting effective prompts to guide and refine the outputs of large language models (LLMs) like ChatGPT. It's a way of programming these models to generate more relevant, accurate, and nuanced responses tailored to your specific needs.

Think of it like giving instructions to a highly intelligent but somewhat naive assistant. By providing clear, well-structured prompts, you can help the LLM understand the context, constraints, and desired qualities of the output you're looking for. This, in turn, can lead to more efficient and productive interactions that save time and effort.

## Why Prompt Engineering Matters

So why should you care about prompt engineering? Because the quality of an LLM's output depends heavily on how you ask for it. A well-structured prompt is often the difference between a generic answer and one you can actually use.

As White and his colleagues point out, prompt engineering can enable a wide range of powerful capabilities, such as:

- Creating new interaction paradigms, like simulating a Linux terminal or generating quizzes

- Automating complex processes by generating scripts and artifacts

- Enhancing the quality and accuracy of outputs through self-adaptation and error identification

- Facilitating knowledge transfer across domains by documenting reusable solutions

Without prompt engineering, you're essentially leaving the effectiveness of your LLM interactions up to chance. By taking a more proactive and strategic approach, you can ensure that you're getting the most value out of these powerful tools.

## The Building Blocks of Prompt Engineering

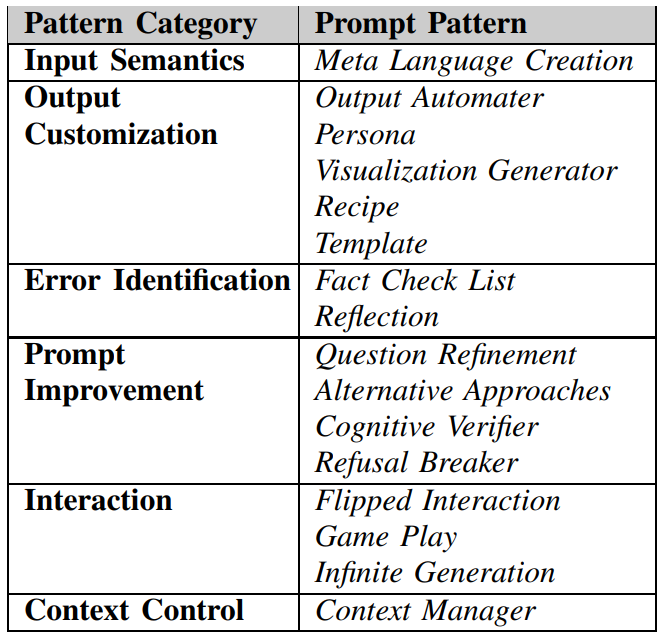

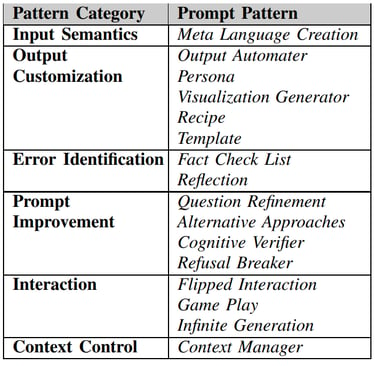

So what does effective prompt engineering look like in practice? According to White and his team, it all comes down to a set of fundamental building blocks known as prompt patterns.

Prompt patterns are reusable solutions to common problems that arise when working with LLMs. They provide a structured way of thinking about prompts and help users develop mental models for interacting with these tools more effectively.

Each prompt pattern consists of several key components:

1. Name and Classification: A descriptive label that identifies the pattern and assigns it to a category based on its function (e.g., Input Semantics, Output Customization, Error Identification).

2. Intent and Context: A clear statement of the pattern's purpose and the problem it's designed to solve.

3. Motivation: An explanation of why the pattern is needed and how it can benefit users.

4. Structure and Key Ideas: The fundamental contextual statements that make up the pattern.

5. Example Implementation: A concrete sample prompt that demonstrates how the pattern is worded in practice.

6. Consequences: A summary of the pattern's advantages, limitations, and potential risks.

By studying and applying these patterns, you can develop a more systematic approach to prompt engineering that yields consistent, high-quality results.
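If you start collecting your own patterns, it can help to record them in the same shape so they stay reusable. Below is a minimal Python sketch, assuming you want to keep them in code. The `PromptPattern` class and its field names are hypothetical (the paper defines a written format, not a data structure), and the Persona entry is abbreviated from the example later in this article:

```python
from dataclasses import dataclass, field


@dataclass
class PromptPattern:
    """One catalog entry, mirroring the pattern components listed above."""
    name: str
    classification: str  # e.g. "Input Semantics", "Output Customization"
    intent: str
    motivation: str
    structure: list[str] = field(default_factory=list)  # key contextual statements
    example: str = ""
    consequences: str = ""

    def render(self) -> str:
        """Join the structure statements into a prompt you can paste into a chat."""
        return "\n".join(self.structure)


persona = PromptPattern(
    name="Persona",
    classification="Output Customization",
    intent="Have the LLM answer from the point of view of a given role.",
    motivation="You may know which expertise you want applied without knowing exactly what to ask for.",
    structure=[
        "From now on, act as a security reviewer.",
        "Provide outputs that a security reviewer would regarding the code.",
    ],
)

print(persona.render())
```

Keeping the structure as a list of separate statements makes it easy to reuse or swap individual sentences without rewriting the whole prompt.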

## Putting Prompt Patterns into Practice

Now that we've covered the basics of prompt patterns, let's take a closer look at some specific examples and how they can be used to enhance your interactions with ChatGPT.

### The Meta Language Creation Pattern

One of the most powerful prompt patterns identified by White and his colleagues is the Meta Language Creation pattern. This pattern allows you to define custom semantics for specific symbols or statements, essentially creating a new language for communicating with the LLM.

For example, you could use this pattern to establish a standardized notation for describing graph structures, like "a → b" to describe a graph with nodes "a" and "b" and a directed edge from "a" to "b". By teaching the LLM this notation upfront, you can then use it throughout your conversation to quickly and unambiguously convey complex ideas.

Here's what a sample implementation of the Meta Language Creation pattern might look like:

```
When I provide a graph description, here are the rules:
- A letter represents a node
- An arrow (→) represents a directed edge between nodes, as in a → b
- A dash (-) represents an undirected edge between nodes, as in a - b
- Edge properties go in brackets right after a leading dash, like a -[weight:2]→ b (directed) or a -[weight:2]- b (undirected)
```

By establishing these rules at the outset, you can then simply write something like "a -[weight:5]→ b" and expect the LLM to read it as a directed edge from "a" to "b" with a weight of 5.

Of course, as with any powerful tool, the Meta Language Creation pattern comes with some risks. If not carefully designed, your custom language could introduce ambiguity or confusion. It's important to use clear, specific terms and establish context to avoid these pitfalls.
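A useful test of whether a notation is unambiguous is whether you could write a parser for it: if a program can give every string exactly one reading, that's a good sign the LLM will be able to read it consistently too. Here is a minimal Python sketch, assuming single-letter node names and a property block written as `-[key:value, ...]` immediately before the edge symbol, as in `a -[weight:5]→ b`. The undirected `a -[k:v]- b` form, the `Edge` class, and `parse_edge` are assumptions of this sketch, not part of the pattern itself:

```python
import re
from dataclasses import dataclass, field

# Assumed grammar for this sketch:
#   a → b             directed edge
#   a - b             undirected edge
#   a -[k:v, ...]→ b  directed edge with properties
#   a -[k:v, ...]- b  undirected edge with properties (an assumption)
EDGE = re.compile(
    r"^\s*([A-Za-z])\s*"        # source node: a single letter
    r"(?:-\[([^\]]*)\])?\s*"    # optional property block, e.g. -[weight:5]
    r"(→|-)\s*"                 # edge symbol: arrow = directed, dash = undirected
    r"([A-Za-z])\s*$"           # target node
)


@dataclass
class Edge:
    source: str
    target: str
    directed: bool
    props: dict = field(default_factory=dict)


def parse_edge(text: str) -> Edge:
    """Parse one edge statement, raising ValueError if it doesn't fit the rules."""
    m = EDGE.match(text)
    if m is None:
        raise ValueError(f"not valid graph notation: {text!r}")
    source, raw_props, symbol, target = m.groups()
    props = {}
    for pair in (raw_props or "").split(","):
        if not pair.strip():
            continue
        key, _, value = pair.partition(":")
        value = value.strip()
        # Store whole numbers as ints, everything else as text.
        props[key.strip()] = int(value) if value.isdigit() else value
    return Edge(source, target, directed=(symbol == "→"), props=props)


print(parse_edge("a -[weight:5]→ b"))
# Edge(source='a', target='b', directed=True, props={'weight': 5})
print(parse_edge("a - b"))
# Edge(source='a', target='b', directed=False, props={})
```

If you find yourself unable to decide how the parser should treat some input, that's exactly the kind of ambiguity worth resolving in the rules before handing them to the LLM.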

### The Output Automater Pattern

Another highly useful prompt pattern is the Output Automater, which instructs the LLM to generate scripts or automation artifacts based on its own suggestions. This can be a huge time-saver when working with multi-step processes or complex workflows.

Let's say you're using ChatGPT to help deploy a Python application to AWS. Rather than manually implementing each step the LLM recommends, you can use the Output Automater pattern to have it generate a ready-to-run script:

```
From now on, whenever you generate code that spans more than one file, generate a Python script that can be run to automatically create the specified files or make changes to existing files to insert the generated code.
```

With this pattern in place, ChatGPT should not only provide the necessary code snippets but also package them into a script that applies them for you. This can substantially reduce the effort required to put the LLM's suggestions into practice.

As powerful as the Output Automater pattern is, it's important to use it judiciously. The generated automation artifacts should be reviewed carefully before execution, as LLMs can sometimes produce inaccuracies or unintended consequences. It's also crucial to provide sufficient context for the LLM to generate artifacts that will work in your specific environment.
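Here is a minimal sketch of the kind of artifact this pattern asks for, written in Python to match the prompt. The file names and contents are hypothetical placeholders, the `write_files` helper and `--apply` flag are our own, and it only handles whole-file writes rather than inserting code into existing files. It defaults to a dry run so you can review what it would do before anything touches disk:

```python
import sys
from pathlib import Path

# Hypothetical output: each entry maps a relative path to the full file
# contents the LLM generated. Real values would come from the conversation.
FILES = {
    "app/main.py": 'print("hello from app")\n',
    "deploy/settings.txt": "stage=dev\n",
}


def write_files(files: dict[str, str], root: Path, dry_run: bool = True) -> list[Path]:
    """Write each file under root, or only report the plan when dry_run is True."""
    touched = []
    for rel, content in files.items():
        path = root / rel
        action = "overwrite" if path.exists() else "create"
        mode = "dry-run" if dry_run else "apply"
        print(f"[{mode}] {action} {path}")
        if not dry_run:
            # Create intermediate directories such as app/ and deploy/.
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text(content, encoding="utf-8")
        touched.append(path)
    return touched


if __name__ == "__main__":
    # Preview by default; pass --apply to actually write the files.
    write_files(FILES, Path.cwd(), dry_run="--apply" not in sys.argv)
```

Running the script with no arguments only prints the plan; adding `--apply` writes the files. Making the safe behavior the default is one simple way to build the "review before execution" step into the artifact itself.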

### The Persona Pattern

A third prompt pattern that can be highly effective is the Persona pattern, which involves assigning a specific role or character to the LLM to guide its responses. By giving the LLM a clear persona to embody, you can help it focus on the most relevant details and generate outputs tailored to your needs.

For example, if you're looking for a security-focused code review, you could use the following prompt:

From now on, act as a security reviewer. Pay close attention to the security details of any code that we look at. Provide outputs that a security reviewer would regarding the code.

With this persona in place, ChatGPT should approach the code review from the perspective of a security expert, highlighting potential vulnerabilities and relevant best practices. This can help you catch security issues before deployment, though it doesn't replace a review by a human security expert.

The Persona pattern can be applied to a wide range of roles and contexts, from simulating a Linux terminal to role-playing a customer service agent. The key is to provide clear, specific instructions that help the LLM understand the persona's goals, knowledge, and constraints.
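When you call a model through an API rather than the ChatGPT interface, the persona usually goes in a system message. Below is a minimal Python sketch, assuming the `{"role": ..., "content": ...}` message shape used by chat-style APIs such as OpenAI's Chat Completions. The `persona_messages` helper is hypothetical, and no API call is made:

```python
def persona_messages(role: str, focus: list[str], constraints: list[str],
                     user_input: str) -> list[dict[str, str]]:
    """Build a two-message transcript that applies the Persona pattern.

    The persona goes in the first ("system") message so it frames every
    later turn; the user's actual request follows as a "user" message.
    """
    lines = [f"From now on, act as a {role}."]
    lines += [f"Pay close attention to {item}." for item in focus]
    lines += constraints
    lines.append(f"Provide outputs that a {role} would.")
    return [
        {"role": "system", "content": " ".join(lines)},
        {"role": "user", "content": user_input},
    ]


messages = persona_messages(
    role="security reviewer",
    focus=["input validation", "how secrets are handled"],
    constraints=["Cite the line number for each issue you find."],
    user_input="Review this function:\ndef load(path):\n    return open(path).read()",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Splitting the persona into a role, a focus list, and explicit constraints mirrors the advice above: each part spells out one aspect of the persona's goals, knowledge, or limits. You would then pass `messages` to whichever chat API you use.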

## Combining Prompt Patterns for Maximum Effect

One of the most powerful aspects of prompt patterns is their composability. By combining multiple patterns together, you can create even more sophisticated and effective interactions with ChatGPT.

For example, you could combine the Question Refinement pattern with the Cognitive Verifier pattern so that the LLM not only suggests improvements to your questions but also asks you a series of follow-up questions to help arrive at a more comprehensive answer. The last sentence of the prompt below also assigns the LLM a temporary persona, and it assumes your questions are about AWS:

From now on, whenever I ask a question, ask four additional questions that would help you produce a better version of my original question. Then, use my answers to suggest a better version of my original question. After the follow-up questions, temporarily act as a user with no knowledge of AWS and define any terms that I need to know to accurately answer the questions.

By chaining these patterns together, you can create a more interactive and adaptive conversation that gradually builds toward a high-quality output.
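Since each pattern contributes a self-contained instruction, you can keep the fragments separately and assemble them per conversation. Below is a minimal Python sketch, assuming you store patterns as string templates. The constant names and the `compose` helper are hypothetical. With these arguments, the output is exactly the combined prompt above:

```python
# Hypothetical pattern fragments, stored as templates with placeholders.
QUESTION_REFINEMENT = (
    "From now on, whenever I ask a question, ask {n} additional questions that would "
    "help you produce a better version of my original question. Then, use my answers "
    "to suggest a better version of my original question."
)
PERSONA_GLOSSARY = (
    "After the follow-up questions, temporarily act as a user with no knowledge of "
    "{domain} and define any terms that I need to know to accurately answer the questions."
)


def compose(*fragments: str) -> str:
    """Join pattern fragments in order, skipping empty ones."""
    return " ".join(f.strip() for f in fragments if f.strip())


prompt = compose(
    QUESTION_REFINEMENT.format(n="four"),
    PERSONA_GLOSSARY.format(domain="AWS"),
)
print(prompt)
```

Parameterizing the fragments (here the number of questions and the domain) lets you reuse the same combination for a different topic without rewriting the prompt by hand.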

Of course, combining prompt patterns is not without its challenges. As you layer on more complexity, there's a greater risk of introducing errors or unexpected behaviors. It's important to test your prompts thoroughly and monitor the LLM's responses to ensure they align with your intended outcomes.
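Testing a prompt can be as simple as running it a few times and checking each reply against what the pattern promised. Below is a minimal Python sketch for the combined prompt above, which asks for four follow-up questions. The question-counting heuristic (a line ending in "?"), the helper names, and the sample reply are all made up for illustration; in practice you would feed in real model output:

```python
def count_questions(reply: str) -> int:
    """Heuristic: count lines that end with a question mark."""
    return sum(1 for line in reply.splitlines() if line.strip().endswith("?"))


def check_refinement_reply(reply: str, expected: int = 4) -> list[str]:
    """Return a list of problems with the reply; an empty list means it passed."""
    problems = []
    found = count_questions(reply)
    if found != expected:
        problems.append(f"expected {expected} follow-up questions, found {found}")
    return problems


# Made-up reply standing in for real model output.
sample_reply = """1. Which AWS region are you deploying to?
2. Is the application containerized?
3. Does it need a managed database?
4. What traffic do you expect at launch?"""

print(check_refinement_reply(sample_reply))     # []
print(check_refinement_reply("Which region?"))  # ['expected 4 follow-up questions, found 1']
```

Because replies vary from run to run, a check like this is most useful when applied to several replies to the same prompt rather than to a single one.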

## The Future of Prompt Engineering

As LLMs continue to evolve and expand their capabilities, the field of prompt engineering is poised for significant growth and innovation. Researchers like White and his colleagues are just beginning to scratch the surface of what's possible with these powerful tools.

Looking ahead, we can expect to see the development of more sophisticated prompt patterns tailored to specific domains and use cases. From generating legal contracts to designing complex systems, prompt engineering has the potential to transform the way we work with AI across a wide range of industries.

At the same time, there will likely be a growing need for collaboration and knowledge sharing among prompt engineers. As the field matures, establishing best practices and standard frameworks will be crucial to ensuring the reliability and reproducibility of prompt-driven interactions.

## Conclusion: Embrace the Power of Prompt Engineering

Prompt engineering is a rapidly evolving field with immense potential to transform the way we interact with AI. By mastering the art of crafting effective prompts, you can unlock new levels of productivity, creativity, and insight in your work with ChatGPT and other LLMs.

Whether you're a software developer looking to automate complex workflows, a business leader seeking to streamline customer service, or a researcher exploring new frontiers of AI, prompt engineering is an essential skill to have in your toolkit.

© Copyright King of Automation 2024. All Rights Reserved.